In this blog post I am going to look at Azure Load Testing, using it to test how much load my web application can take before it breaks.

I am currently hosting my Flask (Python) web application within Microsoft Azure. Originally I went with the cheapest compute option I could find, as this project is just to show off the work (i.e. it is not a production system), so I fired up a Standard_B1s (1 vCPU, 1 GiB memory, 4 GiB temp storage). At first it seemed to run Ubuntu, Nginx and my Python code with no major issues. However, after a few manual tests the site would time out or act very sluggish.

On investigation, the 1 GiB of memory was the bottleneck (usage would hit close to 100% and then the site would time out), so I decided to scale vertically (more on this later) and upgraded the compute size to a Standard_B1ms (1 vCPU, 2 GiB memory, 4 GiB temp storage). Manual testing showed that between 1.1 GiB and 1.2 GiB of memory was now free most of the time. With the site up and running stably I wondered: what would it take to make the site unresponsive or fail again?

Microsoft Azure currently offers Azure Load Testing in preview. Creating an Azure Load Testing resource gives two ways to build a test: a quick test (which I will use in this post) or uploading a JMeter script.

The Quick test option asks for a Test URL (i.e. the URL you want to load test), the number of virtual users to test the URL with, how long the test should last (in seconds) and the ramp-up time (in seconds, i.e. how long until all the virtual users are active). Once the values have been entered, Azure will flag how many Virtual User Hours (VUH) the test will consume. This is worth noting as Azure Load Testing pricing varies depending on VUH usage per month; see https://azure.microsoft.com/en-gb/pricing/details/load-testing/ .
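To make the ramp-up setting a bit more concrete, here is a minimal sketch of how many virtual users would be active at a given point in a test. It assumes the users are started at an even rate across the ramp-up window, which is my assumption rather than something the Quick test screen spells out. The function name and the 60 second ramp-up are made up for illustration.

```python
def active_virtual_users(elapsed_s, total_users, ramp_up_s):
    """Estimate the number of running virtual users after elapsed_s seconds.

    Assumption: users are started at an even rate across the ramp-up window.
    """
    if elapsed_s < 0:
        return 0
    # With no ramp-up, or once the ramp-up window has passed, everyone is active.
    if ramp_up_s <= 0 or elapsed_s >= ramp_up_s:
        return total_users
    # Part-way through the ramp-up: a proportional share, rounded down.
    return int(total_users * elapsed_s / ramp_up_s)


# Hypothetical example: 8 virtual users with a 60 second ramp-up.
for t in (0, 15, 30, 60):
    print(t, active_virtual_users(t, total_users=8, ramp_up_s=60))
```

A longer ramp-up gives a gentler start, which can make it easier to see at what user count the response times begin to climb.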

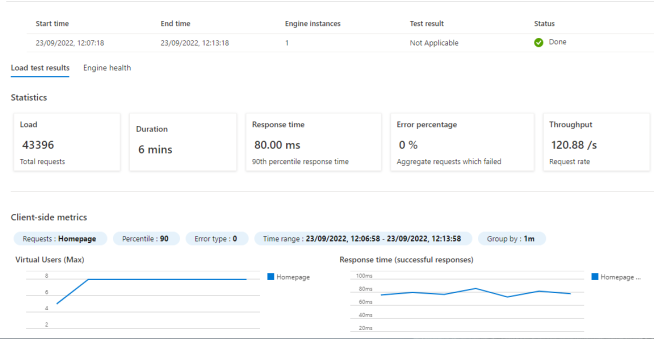

I put in the details for my web application and targeted it with 8 virtual users for 360 seconds (6 minutes). I looked at the monitoring for my Azure compute before, during and after the test so that I could see what the load test did to the compute stats.
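As a rough sanity check on the VUH figure Azure shows, the estimate below assumes a Virtual User Hour is simply the number of virtual users multiplied by the test duration in hours. Any rounding or minimums applied on the bill are not modelled, and the function name is my own.

```python
def virtual_user_hours(virtual_users, duration_s):
    """Estimate VUH as virtual users multiplied by the duration in hours.

    Assumption: no billing rounding or minimum charge is applied.
    """
    return virtual_users * duration_s / 3600


# The test from this post: 8 virtual users for 360 seconds.
print(virtual_user_hours(8, 360))  # 0.8
```

So a short test like this one uses well under a single VUH.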

CPU usage was low before the test (well below 10%). During the test it spiked up to around 100% and stayed there until the test finished. Memory usage dipped a little, but not by much, and disk operations and network usage spiked.

The site stayed up and responsive (no errors), although the response times took a hit: they started out around 20 ms, climbed to around 90 ms and averaged 77 ms.

The JMeter Script option allows for a JMeter script to be uploaded, and for metrics / thresholds to be set so that response times, latency and errors can be more accurately checked.

You may be wondering why you would use a load tester. For my web application it was simply to check that it keeps working under a little load (it is for my degree apprenticeship, and an erroring URL would not look good). However, it is more important for a production (live) site. Imagine launching the next big web application: all looks good, but every so often users report slowness or, worse, outages. Testing the load limits helps to understand when a web site or web application may start to struggle, and when it will finally fail.

The load tester can also be used in a CI / CD pipeline as part of the testing phase: changes could be pushed to a test system and then put under a load test to see whether they increase or decrease performance, with the results determining whether the change counts as a success or a failure.
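The pass or fail decision in a pipeline could look something like the sketch below. This is not the Azure Load Testing API, just plain Python showing the idea of comparing results against thresholds. The function name, the sample response times and the 100 ms limit are all hypothetical.

```python
from statistics import mean


def load_test_passed(response_times_ms, error_count, max_avg_ms, max_errors=0):
    """Return True if the average response time and error count are within limits."""
    if not response_times_ms:
        # No samples means the test told us nothing, so treat it as a failure.
        return False
    return mean(response_times_ms) <= max_avg_ms and error_count <= max_errors


# Hypothetical samples (in ms) from a load test run against a test system.
samples = [20, 85, 90, 88]
print(load_test_passed(samples, error_count=0, max_avg_ms=100))
print(load_test_passed(samples, error_count=0, max_avg_ms=50))
```

A pipeline step could then exit with a non-zero status when the function returns `False`, which would stop the change from going any further.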

Back to an earlier paragraph: I scaled vertically (i.e. increased the memory in the compute instance). This was to choose a suitable base compute instance. As the load test shows, this compute instance was suitable for the limited number of users I’m expecting for my degree apprenticeship. However, if I were then going to use a virtual compute instance as a production system I would not keep scaling vertically. Instead I would keep the Standard_B1s as the compute instance to serve the Flask web application, and I would move the SQL database from SQLite to MySQL and place it on its own instance. With those two bits separated I would place a load balancer in front of the Flask web application and have the Flask web application compute instances scale horizontally as needed. Or, I’d containerise the Flask web app and MySQL, stick a load balancer in and let the containers scale as needed. Scaling horizontally would allow an increase in resources when needed, and a decrease when not.

More information about Azure Load Testing can be found at https://azure.microsoft.com/en-gb/services/load-testing/ .